Microsoft's Next-Gen Cloud Investment Exceeds $60 Billion to Secure AI Computing Power

News Summary

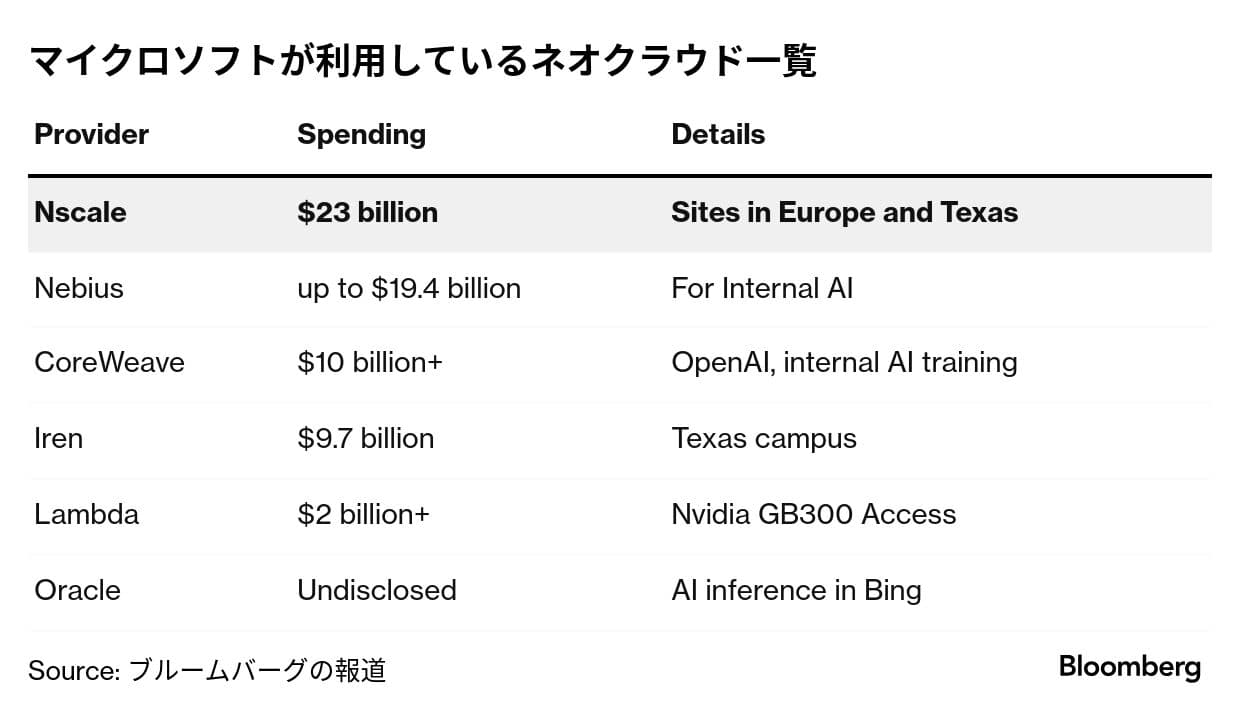

U.S. software giant Microsoft has committed more than $60 billion (approximately ¥9.21 trillion) to next-generation cloud providers, known as "neoclouds," underscoring how urgently it needs to secure AI computing power. The largest contract, worth about $23 billion, is with UK startup Nscale and will give Microsoft access to approximately 200,000 of NVIDIA's latest GB300 chips in facilities across the UK, Norway, Portugal, and Texas.

Like other software giants, Microsoft is struggling to build data centers fast enough to meet demand from both its internal AI tool development and its customers, and it is increasingly relying on smaller infrastructure providers such as CoreWeave and Nebius Group. Microsoft's spending on these neocloud companies has nearly doubled since early October. Recently, Microsoft also secured about $9.7 billion in AI cloud capacity from Australia's IREN and announced a multibillion-dollar deal with Lambda. According to sources, many of Microsoft's neocloud agreements are five-year contracts.

A Microsoft spokesperson said the company's global infrastructure strategy is based on short- and long-term customer demand signals and emphasizes flexibility and choice, combining its own data centers, leased facilities, and third-party providers to respond quickly to demand worldwide. Bloomberg Intelligence analyst Anurag Rana said the series of neocloud deals confirms a severe industry-wide capacity shortage driven by surging AI demand.
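To put the Nscale figures in perspective, the short Python sketch below works through the implied contract value per GPU. It is a rough illustration under stated assumptions, not a reported price: it assumes the approximately $23 billion covers the whole contract term, that this particular deal runs five years (the reporting says only that many of Microsoft's neocloud agreements do), and that the value is spread evenly across roughly 200,000 GPUs. Because the contract buys access to capacity, the figure presumably also covers facilities, power, networking, and operations, so it should not be read as a chip price. The function name `implied_rates` is hypothetical.

```python
# Rough, illustrative arithmetic on the reported Nscale figures.
# ASSUMPTIONS (not confirmed for this specific deal):
#   - the ~$23B covers the full contract term
#   - the term is five years ("many" neocloud deals reportedly are)
#   - the value is spread evenly over ~200,000 GPUs
# The contract price likely also covers facilities, power, and
# networking, so these are not chip prices.

CONTRACT_VALUE_USD = 23e9   # reported, approximate
GPU_COUNT = 200_000         # reported, approximate
TERM_YEARS = 5              # assumption
HOURS_PER_YEAR = 24 * 365   # ignores leap years


def implied_rates(value_usd: float, gpus: int, years: int) -> dict[str, float]:
    """Return implied contract value per GPU, per GPU-year, and per GPU-hour."""
    per_gpu = value_usd / gpus
    per_gpu_year = per_gpu / years
    per_gpu_hour = per_gpu_year / HOURS_PER_YEAR
    return {
        "per_gpu": per_gpu,
        "per_gpu_year": per_gpu_year,
        "per_gpu_hour": per_gpu_hour,
    }


if __name__ == "__main__":
    rates = implied_rates(CONTRACT_VALUE_USD, GPU_COUNT, TERM_YEARS)
    for name, value in rates.items():
        print(f"{name}: ${value:,.2f}")
```

Under these assumptions it prints $115,000.00 per GPU, $23,000.00 per GPU-year, and about $2.63 per GPU-hour; changing `TERM_YEARS` shows how sensitive the hourly figure is to the assumed contract length.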

Background

Global demand for AI computing power has surged with the rapid development and adoption of AI technologies, particularly large language models (LLMs), setting off intense competition among tech giants for advanced GPUs and data center capacity. Microsoft, a leading cloud provider through its Azure platform, is a key player in the AI race, investing heavily in AI research and integrating AI capabilities across its product suite. NVIDIA's advanced GPUs, such as the GB300 chips in the Nscale deal, are critical components for AI training and inference, which makes acquiring and deploying them a strategic imperative for companies like Microsoft. By building its own infrastructure while also partnering with specialized providers, Microsoft aims to scale its computing power quickly enough to keep pace with growing demand.

In-Depth AI Insights

Why is Microsoft turning to "neoclouds" rather than relying solely on its own data centers?
- Rapid Scaling Needs: Demand for AI computing power is growing much faster than traditional data center construction cycles allow. Partnering with specialized neocloud providers lets Microsoft deploy and access the latest AI hardware more quickly, bypassing the capital expenditure, land acquisition, and construction bottlenecks of building its own facilities.
- Optimized Resource Allocation: Specialized providers can often manage and operate AI-specific hardware (such as NVIDIA GB300 chips) more efficiently and offer flexible, on-demand service models. This lets Microsoft devote more resources to core AI software development and customer relationships rather than infrastructure operations.
- Risk Mitigation: Diversifying suppliers reduces Microsoft's reliance on a single infrastructure supply model, mitigating risks from supply chain disruptions or technology upgrade cycles.

How does this investment affect the competitive landscape of the AI infrastructure market?
- Validation of the Neocloud Model: Microsoft's massive commitments validate the neocloud business model as a crucial complement to hyperscalers' own AI infrastructure, potentially attracting more capital and new players to the segment and further fragmenting the market.
- Intensified Competition for Chips: The $23 billion contract for access to roughly 200,000 GB300 chips highlights the industry's heavy reliance on, and fierce competition for, NVIDIA's high-end AI chips. This could push chip prices higher, prompt NVIDIA to increase production, and encourage other manufacturers to accelerate development of alternative chips.
- Consolidation of the Giant's Advantage: Although it relies on third parties, Microsoft can use procurement at this scale to lock in scarce computing power quickly, solidifying its leadership in cloud AI. This puts more pressure on smaller competitors, which may struggle to secure resources on a similar scale.

In the long run, is this outsourced AI computing model a boon or a bane for Microsoft's profitability and strategic control?
- Short-term Gains vs. Long-term Costs: In the short term, outsourcing helps Microsoft meet AI demand quickly, driving growth in its Azure AI services and boosting revenue. Over the long term, however, relying on third-party providers could mean higher operating costs (leasing rather than owning capacity) and could erode its gross margins.
- Strategic Control vs. Flexibility: Outsourcing offers flexibility, but over-reliance on third parties for critical AI infrastructure could reduce Microsoft's control over underlying hardware configurations, security standards, and future technology roadmaps. This requires a careful balance between cost efficiency and strategic autonomy, although long-term contracts and potential equity investments would still give Microsoft some influence.